Elixir Async Tasks Benchmark

EATBenchmark compares the execution time of synchronous and asynchronous HTTP request jobs.

I needed a way to handle multiple HTTP requests without blocking. Initially, I was checking out how processes work and was having some fun spawning a ton of them while staring at Erlang’s Observer GUI. Then I looked at how Elixir’s Task module could help me achieve similar results at a higher level of abstraction.

And so I tried writing some code.

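```elixir
urls
# Start one task per URL, so every request is in flight at once.
|> Enum.map(fn url -> Task.async(fn -> HTTPoison.get(url) end) end)
# Then wait up to 30 seconds for each task to finish.
|> Enum.map(&Task.await(&1, 30000))
```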

We start a task for each HTTP request and then wait on each of those tasks.

Quoting from Michał Muskała’s post:

The reason why we are using map as compared to stream, is because stream is lazy, and starts processing the next element only after the current one passed through the whole pipeline. It would make tasks go one, by one through our Task.async and Task.await turning them sequential! The exact opposite of what we’re trying to achieve.
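To see the quoted point in action, here is a minimal sketch that runs the same task pipeline once with Enum.map and once with Stream.map. It assumes a hypothetical fake_get/1 that sleeps for 100 ms in place of HTTPoison.get/1, so it runs without any network access; the module name MapVsStream is also made up for illustration.

```elixir
defmodule MapVsStream do
  # Hypothetical stand-in for HTTPoison.get/1: sleeps to mimic network latency.
  def fake_get(id) do
    Process.sleep(100)
    {:ok, id}
  end

  # Eager: the first Enum.map starts every task before any await runs.
  def eager(ids) do
    ids
    |> Enum.map(fn id -> Task.async(fn -> fake_get(id) end) end)
    |> Enum.map(&Task.await(&1, 30_000))
  end

  # Lazy: each element is started and awaited before the next one is started.
  def lazy(ids) do
    ids
    |> Stream.map(fn id -> Task.async(fn -> fake_get(id) end) end)
    |> Stream.map(&Task.await(&1, 30_000))
    |> Enum.to_list()
  end

  # :timer.tc/1 returns {elapsed_microseconds, result}; convert to milliseconds.
  def time_ms(fun) do
    {micros, result} = :timer.tc(fun)
    {div(micros, 1000), result}
  end
end

ids = Enum.to_list(1..10)
{eager_ms, eager_result} = MapVsStream.time_ms(fn -> MapVsStream.eager(ids) end)
{lazy_ms, lazy_result} = MapVsStream.time_ms(fn -> MapVsStream.lazy(ids) end)
IO.puts("Enum.map: #{eager_ms} ms, Stream.map: #{lazy_ms} ms")
```

With ten simulated 100 ms requests, the Enum version should finish in roughly the time of a single request, while the Stream version needs at least a full second because the tasks end up running one after another.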

To test that this actually works as expected, I compared it with a batch of synchronous HTTP requests.

```elixir
urls
# Each request blocks until the previous one has completed.
|> Enum.map(&HTTPoison.get/1)
```

Borrowing :timer.tc/1 from Erlang’s timer module, which runs a function and returns {elapsed_microseconds, result}, we can easily measure how long each batch of requests took.
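A minimal sketch of that comparison, assuming a hypothetical fake_get/1 that sleeps 50 ms instead of calling HTTPoison.get/1, and a placeholder URL list; it only illustrates how :timer.tc/1 wraps each batch, not the exact code in the escript.

```elixir
defmodule BatchTimer do
  # Hypothetical stand-in for HTTPoison.get/1 so the sketch runs offline.
  def fake_get(url) do
    Process.sleep(50)
    {:ok, url}
  end

  # Synchronous batch: one request after another.
  def sync_batch(urls), do: Enum.map(urls, &fake_get/1)

  # Asynchronous batch: start all tasks, then await them.
  def async_batch(urls) do
    urls
    |> Enum.map(fn url -> Task.async(fn -> fake_get(url) end) end)
    |> Enum.map(&Task.await(&1, 30_000))
  end

  # Returns only the elapsed time in microseconds.
  def measure(fun) do
    {micros, _result} = :timer.tc(fun)
    micros
  end
end

# Placeholder URL; nothing is actually requested.
urls = List.duplicate("http://localhost:4000/", 20)
sync_us = BatchTimer.measure(fn -> BatchTimer.sync_batch(urls) end)
async_us = BatchTimer.measure(fn -> BatchTimer.async_batch(urls) end)
IO.puts("sync: #{div(sync_us, 1000)} ms, async: #{div(async_us, 1000)} ms")
```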

At this point, the results showed that both versions took about the same time to execute, even for a large number of HTTP requests. This is due to hackney’s default connection pool, which allows at most 50 connections. We can work around it by defining a pool with a much larger size:

```elixir
# A named pool allowing up to 1000 connections (the default pool allows 50).
:ok =
  :hackney_pool.start_pool(
    :first_pool,
    [timeout: 15000, max_connections: 1000]
  )
```

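Define the pool before sending any requests, then point each request at it with HTTPoison.get(url, [], hackney: [pool: :first_pool]); otherwise hackney keeps using its default pool. More on this here.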
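Why a pool limit hides the benefit of tasks can be modelled without hackney at all. The sketch below swaps in Task.async_stream/3 with :max_concurrency to cap how many simulated requests run at once; this is only a stand-in for a connection pool, not how hackney implements one, and fake_get/1 and the URLs are hypothetical.

```elixir
defmodule PoolModel do
  # Hypothetical request with 100 ms of simulated latency.
  def fake_get(url) do
    Process.sleep(100)
    {:ok, url}
  end

  # At most pool_size requests are in flight at once, loosely like a pool
  # with max_connections: pool_size (a model, not hackney's implementation).
  def run(urls, pool_size) do
    urls
    |> Task.async_stream(&fake_get/1, max_concurrency: pool_size, timeout: 30_000)
    |> Enum.map(fn {:ok, result} -> result end)
  end

  def time_ms(fun) do
    {micros, _result} = :timer.tc(fun)
    div(micros, 1000)
  end
end

urls = List.duplicate("http://localhost:4000/", 200)
small_pool_ms = PoolModel.time_ms(fn -> PoolModel.run(urls, 50) end)
large_pool_ms = PoolModel.time_ms(fn -> PoolModel.run(urls, 1000) end)
IO.puts("cap 50: #{small_pool_ms} ms, cap 1000: #{large_pool_ms} ms")
```

With 200 requests and a cap of 50, the work has to proceed in at least four 100 ms waves, so it cannot finish in under 400 ms; with a cap of 1000, all requests start together.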

Another problem with this test is that the first request usually takes noticeably longer, which skews the results of jobs with a smaller number of requests. I confirmed this by swapping the order of the synchronous and asynchronous jobs.

This might be due to the initial DNS query, so we can simply run the first HTTPoison.get(url) as a warm-up and ignore its result.
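A minimal sketch of the warm-up idea. It assumes a hypothetical fake_get/2 whose first call pays a one-off 300 ms cost (standing in for something like a cold DNS lookup), tracked in an Agent; the timed batch is identical in both runs, and only the untimed warm-up call differs.

```elixir
defmodule WarmupBench do
  # Hypothetical resolver state: :cold until the first request has been made.
  def start_resolver, do: Agent.start_link(fn -> :cold end)

  # Hypothetical request: the first call pays a one-off setup cost.
  def fake_get(resolver, url) do
    case Agent.get_and_update(resolver, fn state -> {state, :warm} end) do
      :cold -> Process.sleep(300)
      :warm -> :ok
    end

    Process.sleep(10)
    {:ok, url}
  end

  # Optionally sends one untimed warm-up request, then times the whole batch.
  def time_batch(resolver, urls, warm_up?) do
    if warm_up?, do: fake_get(resolver, hd(urls))
    {micros, _result} = :timer.tc(fn -> Enum.map(urls, &fake_get(resolver, &1)) end)
    div(micros, 1000)
  end
end

urls = List.duplicate("http://localhost:4000/", 5)
{:ok, cold} = WarmupBench.start_resolver()
cold_ms = WarmupBench.time_batch(cold, urls, false)
{:ok, warm} = WarmupBench.start_resolver()
warm_ms = WarmupBench.time_batch(warm, urls, true)
IO.puts("without warm-up: #{cold_ms} ms, with warm-up: #{warm_ms} ms")
```

For small batches like this one, the one-off cost dominates the measurement unless it is moved outside the timed section.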

With the above problems addressed, I wrote a script that times the synchronous and asynchronous jobs against each other:

```shell
./tasks -u https://noncaching.url -m 60 -l 50
```

-u: url - a non-caching URL, served by a server with a reasonable pool size

-m: multiplier - the number of URLs in each job, i.e. the job size

-l: loop - how many times the same job is run; more loops give more accurate results
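The three switches could be handled with Elixir’s OptionParser along these lines. This is a minimal sketch, not the actual escript: the long option names (url, multiplier, loop) and the TasksCLI module are hypothetical, and only -u, -m and -l come from the post.

```elixir
defmodule TasksCLI do
  # Hypothetical long names; only the -u, -m and -l switches come from the post.
  @switches [url: :string, multiplier: :integer, loop: :integer]
  @aliases [u: :url, m: :multiplier, l: :loop]

  # Returns {list_of_urls, loops}; -m decides how many copies of the URL form a job.
  def parse(argv) do
    {opts, _rest, _invalid} = OptionParser.parse(argv, strict: @switches, aliases: @aliases)
    url = Keyword.fetch!(opts, :url)
    multiplier = Keyword.get(opts, :multiplier, 1)
    loops = Keyword.get(opts, :loop, 1)
    {List.duplicate(url, multiplier), loops}
  end

  # Runs job_fun `loops` times and returns the mean duration in milliseconds.
  def average_ms(job_fun, loops) when is_integer(loops) and loops > 0 do
    total_us =
      Enum.reduce(1..loops, 0, fn _, acc ->
        {micros, _result} = :timer.tc(job_fun)
        acc + micros
      end)

    total_us / loops / 1000
  end
end

{urls, loops} = TasksCLI.parse(["-u", "https://noncaching.url", "-m", "60", "-l", "50"])
IO.puts("#{length(urls)} URLs per job, #{loops} loops")
```

Averaging over loops smooths out one-off slow runs, which is why more loops should give more stable numbers.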

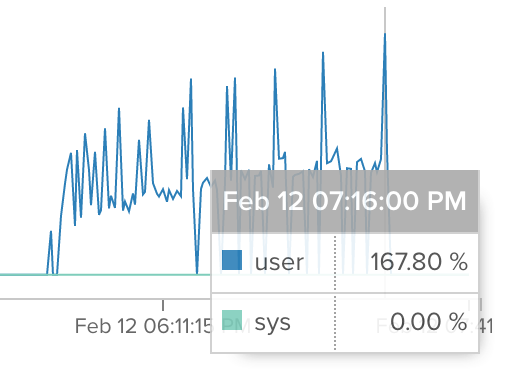

The findings are displayed below:

Result charts: Loop: 1, Loop: 10, Loop: 50

I spun up the target servers myself because I didn’t want to be accused of DoSing someone else’s machine, and the requests were indeed intensive for the tiny servers. :P

You can check out the escript here.